The Test Automation Roadmap is a model that splits the complex topic of test automation into five mindsets. This article covers the Multi-team mindset and is part of a larger series on the Test Automation Roadmap. If you haven’t read it yet, start with the introduction.

Test with neighbors

In the Multi-team mindset, you automate tests against the applications of multiple teams in relatively small shared environments. Multi-team is not end-to-end testing; it’s testing with your neighbors. You try to include as few teams in the shared environment as possible. The more teams you add to the shared environment, the fewer tests you should write.

This is part 5 of the series, but it’s the first time we discuss a shared test environment. There is quite a bit of overlap between the Multi-team and Cherry-pick mindsets. When people talk about test automation, most of them are thinking in either the Cherry-pick or the Multi-team mindset.

Important

The Multi-team mindset focuses on integration between applications. Testing between applications inherently results in a lot of complexity.

Your focus in this mindset is maintenance. Every test you write should create as much value as possible with as little maintenance as possible. That means keeping your tests and test data simple. This simplicity prevents you from drowning in the inherent (maintenance) complexity of multi-team testing.

Problems

Even with all that focus, maintenance will still be your biggest problem. The issue is inherent to testing with multiple teams in a shared environment: every team you add to the shared environment increases test complexity exponentially. The fewer teams you include in your chain, the fewer problems you’ll face.

The underlying reason for this exponential increase is that your tests (or test data) are spread across multiple teams. You rely on the other teams for your tests to pass. Every team has its own priorities, and those priorities rarely align. As a result, coordinating with other teams makes everything take a lot of time.

Shared environments often suffer from performance issues caused by underpowered server hardware. On top of that, people writing these tests tend not to care about speed, which makes the performance issues even worse.

Then there is the elephant in the room: stability. Shared environments tend to be unstable because teams release untested versions of their applications multiple times a day. Environment issues also don’t get priority, so they linger and fester.
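To make the stability problem concrete, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that each team’s application is independently up and working with the same probability `p`. Real outages are rarely that tidy. Under that assumption, a test that needs `n` applications finds all of them healthy with probability p^n, which shrinks exponentially as you add teams. The function name is hypothetical.

```python
def environment_healthy_probability(p: float, teams: int) -> float:
    """Chance that every application in the shared environment is up at once.

    Assumption (for illustration only): each team's application is
    independently available with the same probability p.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if teams < 0:
        raise ValueError("teams must be non-negative")
    return p ** teams


if __name__ == "__main__":
    # Assumed figure: each application is up 95% of the time.
    for teams in (1, 2, 4, 8):
        print(f"{teams} teams: {environment_healthy_probability(0.95, teams):.2f}")
```

With `p = 0.95`, a single application gives you a healthy environment 95% of the time. With eight applications, that drops to roughly 66%, before a single test has found a real bug.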

Tools & Techniques

A great place to start is Risk-Based Testing. Assess your risks and choose your tests based on their risk-to-maintenance ratio. Every test you write should have a high one. When assessing the maintenance load, don’t forget about test data, which is often the biggest source of maintenance for these tests.
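Below is a minimal sketch of ranking candidate tests by their risk-to-maintenance ratio. The 1-to-5 scores, the `CandidateTest` type, the `select_tests` helper, the threshold and the example tests are all hypothetical. In practice you would score risk and maintenance, including the upkeep of test data, together with your team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateTest:
    """A hypothetical candidate test with rough, team-assigned scores (1 to 5)."""
    name: str
    risk: int         # How bad is it if this integration breaks unnoticed?
    maintenance: int  # Expected upkeep of the test itself plus its test data.

    @property
    def ratio(self) -> float:
        return self.risk / self.maintenance


def select_tests(candidates: list[CandidateTest], min_ratio: float) -> list[CandidateTest]:
    """Keep only tests worth their upkeep, highest risk-to-maintenance ratio first."""
    worth_it = [t for t in candidates if t.ratio >= min_ratio]
    return sorted(worth_it, key=lambda t: t.ratio, reverse=True)


candidates = [
    CandidateTest("order is visible in billing API", risk=5, maintenance=2),
    CandidateTest("full GUI checkout across four teams", risk=5, maintenance=5),
    CandidateTest("customer address sync", risk=4, maintenance=1),
    CandidateTest("newsletter opt-in banner", risk=1, maintenance=3),
]

for test in select_tests(candidates, min_ratio=1.5):
    print(f"{test.ratio:.1f}  {test.name}")
```

With a threshold of 1.5, only the address sync check (ratio 4.0) and the billing API check (ratio 2.5) survive. The four-team GUI checkout has a ratio of 1.0. It covers a high risk, but it is exactly the kind of test this mindset tells you to skip.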

Actively limit the scope of your testing efforts. You can limit the scope by reducing the size of the shared environment (e.g. fewer teams) or by reducing the number of tests. Don’t try to test all the things with all the teams.

Some general advice:

- Keep any test you write as maintainable as possible. The same applies to test data.

- Keep your shared environments as small as possible. Including more teams allows you to test a bit more but exponentially increases the maintenance load. As a general rule, it’s not worth it.

- Actively communicate with other teams in the shared environments.

- Prefer API tests over GUI tests as they are easier to maintain and debug.

- When writing API tests, pick one of the following approaches and stick with it:

  - Tests written in a programming language

  - Tests created in an application like Postman, ReadyAPI, etc.

- When writing GUI tests, only use tools where you write tests in a programming language.

- Pipelines are a great way to run your tests on a schedule. Running them as part of a continuous integration pipeline can also be a good idea.

- When using pipelines, plan for environment instability. People will eventually ignore a pipeline that fails at random. Automatically retrying failing tests can greatly improve the reliability of your pipeline.

Position in the model

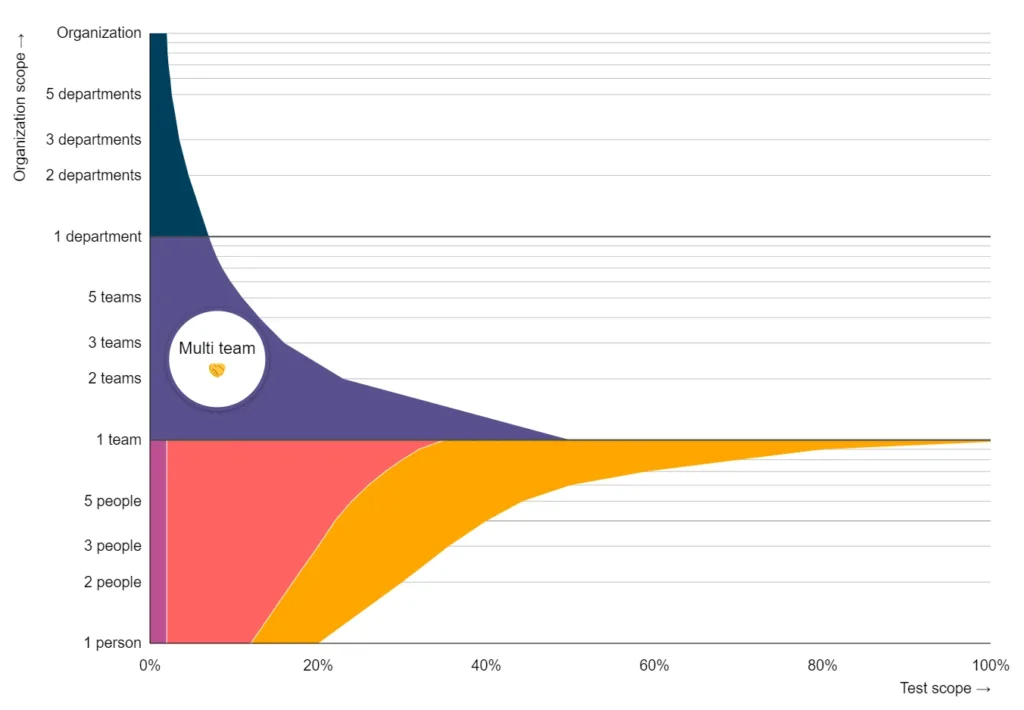

With the Multi-team mindset, you won’t automate many tests. Instead, you focus on the ones that add a lot of value with a minimal maintenance load. The curved right border of the Multi-team mindset shows that the more teams you add to the shared environment, the fewer tests you can run. You can test a lot with many teams, but the maintenance load will become too much to handle. If you want to test more, remove teams from the shared environment. If you’re the only team left in the environment, adopt the Cherry-pick mindset instead.

Real-world example

Here is a short story about how some teams used the Multi-team mindset at a company of about 45,000 employees (according to LinkedIn). Eight teams worked on the Customer Relationship Management (CRM) application. All customer-facing employees, about 10,000 people, used this application every day. The application was also a major data source for over 30 other applications in the organization.

This critical application was released once every quarter, and every release required seven weeks of manual testing: three weeks of end-to-end tests and four weeks of acceptance tests. The organization did not like this, so it pushed for a release every two weeks.

The teams took all the manual test flows and automated them through the GUI. This end-to-end, GUI-based test suite allowed them to release every two weeks. They tried to use the Multi-team mindset but ended up too far to the right and too far to the top of the model. They struggled with coordinating release schedules with other applications, with test stability, with test duration, and more.

After reaching the two-week release milestone, they wanted more. Why not once a week? Or every day? Maybe even after every story? To achieve this, they needed to test without other applications and involve fewer of their own teams (moving down in the model). To test without other applications, they changed their way of working. They did not start work on a story until the other applications were ready for the change. After making the change, they gave the other applications a single day to run tests from their end in a shared environment. This moved the responsibility for testing the other applications to the teams that managed those applications.

They also needed to test fewer things with the Multi-team mindset (moving left in the model). They did this by moving a lot of testing efforts to the Cherry-pick and Everything mindsets. They only used the Multi-team mindset when a change might impact another team. All other tests ran in isolation.

After all these efforts and changes, they ended up with a sustainable way of working that included the Multi-team mindset. However, this approach also required other test suites that used the Cherry-pick or Everything mindsets. In the end, these teams could safely release as often as they wanted.

Conclusion

The Multi-team mindset is about testing in small shared environments with your neighbors. Testing in the same environment with multiple teams increases your maintenance load and hurts test stability. In the Multi-team mindset, you strive for maximum value with minimum maintenance. Only write simple tests whose maintenance load you can bear.

The bigger the shared environment, the more you’ll suffer from poor stability and performance. Larger environments also increase the maintenance load exponentially. Keep shared environments as small as possible.

In the real world, the shared environments are often way too big. When in doubt, make your shared environments smaller.